U0204438 Huang Shichao Alvin

Traditionally, autonomous robots have been designed to perform hazardous and/or repetitive tasks. However, a new range of domestic applications, such as household chores and entertainment, is driving the development of robots that can interact and communicate with the humans around them. At present, most domestic robots are restricted to communicating with humans through pre-recorded messages. Communication amongst humans, however, entails much more than just the spoken word, including facial expression, body posture, gestures, gaze direction and tone of voice.

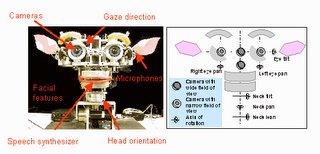

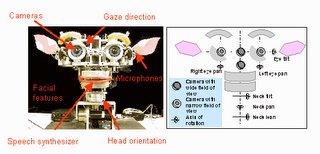

The Sociable Machines Project at MIT has developed an expressive anthropomorphic robot called Kismet that "engages people in natural and expressive face-to-face interaction". To do this, Kismet takes in visual and audio cues from the human interacting with it through four colour CCD cameras mounted on a stereo active vision head and a small wireless microphone worn by the human. Kismet has three degrees of freedom to control gaze direction and three to control its neck, allowing it to move and orient its eyes like a human. This not only improves its visual perception but also lets it use gaze direction as a communication tool. Kismet's face has 15 degrees of freedom and can display a wide assortment of facial expressions, as seen in the picture, conveying various emotions through movement of its eyelids, ears and lips. Lastly, it has a vocalization system driven by an articulatory synthesizer.

In terms of behaviour, the robot's system architecture consists of six subsystems: low-level feature extraction, high-level perception, attention, motivation, behaviour and motor systems. The visual and audio cues it receives are first classified by the feature extraction and perception systems; the attention, motivation and behaviour systems then determine the robot's next action, which the motor system executes. For more information, please see here.
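To make the flow between the six subsystems concrete, here is a minimal Python sketch of one sense-decide-act cycle. It is only an illustration under stated assumptions: every class, function, threshold and motor command name below (`Features`, `perceive`, `Drives.social`, `"saccade+turn_neck"`, etc.) is hypothetical and is not taken from Kismet's actual software, which is far richer.

```python
from dataclasses import dataclass

# Hypothetical stand-ins for Kismet's six subsystems; all names, fields and
# thresholds are illustrative assumptions, not the robot's real interfaces.

@dataclass
class Features:
    skin_tone: float  # 0..1, crude "face present" cue from the cameras
    motion: float     # 0..1, amount of movement in view
    loudness: float   # 0..1, energy picked up by the microphone

@dataclass
class Percept:
    face_seen: bool
    speech_heard: bool
    salience: float

@dataclass
class Drives:
    social: float = 0.5  # rises when nobody engages, falls when someone does

def extract_features(raw: dict) -> Features:
    """Low-level feature extraction: reduce raw sensor readings to numbers."""
    return Features(raw.get("skin", 0.0), raw.get("motion", 0.0), raw.get("audio", 0.0))

def perceive(f: Features) -> Percept:
    """High-level perception: classify features into meaningful cues."""
    return Percept(face_seen=f.skin_tone > 0.5,
                   speech_heard=f.loudness > 0.3,
                   salience=max(f.skin_tone, f.motion))

def attend(p: Percept) -> bool:
    """Attention: decide whether the stimulus is worth focusing on."""
    return p.salience > 0.4

def update_motivation(d: Drives, p: Percept) -> Drives:
    """Motivation: a social drive that faces and speech satisfy."""
    if p.face_seen or p.speech_heard:
        return Drives(social=max(0.0, d.social - 0.2))
    return Drives(social=min(1.0, d.social + 0.1))

def select_behaviour(attending: bool, p: Percept, d: Drives) -> str:
    """Behaviour: pick one action from the current percept and drive level."""
    if attending and p.face_seen:
        return "engage"
    if d.social > 0.7:
        return "call"    # try to get someone to come closer
    if attending:
        return "orient"  # turn eyes/neck toward the salient stimulus
    return "idle"

def motor(action: str) -> str:
    """Motor system: map the chosen behaviour to a hypothetical motor command."""
    commands = {"engage": "smile+hold_gaze", "call": "vocalize+raise_ears",
                "orient": "saccade+turn_neck", "idle": "neutral_face"}
    return commands[action]

def step(raw: dict, drives: Drives) -> tuple[str, Drives]:
    """One pass through the pipeline: sense, classify, decide, act."""
    percept = perceive(extract_features(raw))
    drives = update_motivation(drives, percept)
    action = select_behaviour(attend(percept), percept, drives)
    return motor(action), drives

if __name__ == "__main__":
    cmd, drives = step({"skin": 0.8, "motion": 0.2, "audio": 0.5}, Drives())
    print(cmd)  # smile+hold_gaze
```

The point of the sketch is the ordering: perception only classifies, while the decision about what to do next depends jointly on attention, an internal drive and the current percept, so the same stimulus can produce different actions depending on the robot's "mood".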

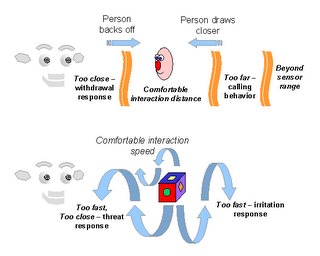

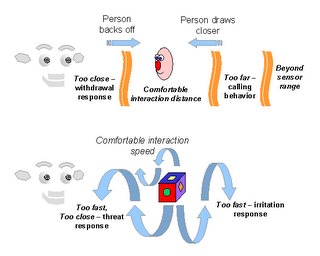

Kismet exhibits several human-like behaviours modeled on those of an infant, such as moving its neck to get closer to an object it is interested in, or engaging in a calling behaviour to make the object come nearer. It also changes its facial expression according to whether the visual and audio stimuli cause it to "feel" happy, sad, etc. Videos of these interactions can be seen here. These infant behaviours are meant to simulate parent-infant exchanges, supporting socially situated learning with a human instructor.

Kismet represents the next step in human-robot interaction, where robots share a morphology similar to that of humans and can thus communicate through humans' natural modes of communication. This will lead to more intuitive and "friendly" robot designs that humans accept more easily as robots become more and more ubiquitous in our lives.

Link to Kismet homepage

5 comments:

u0205081 Chow Synn Nee

It's amazing how robots have evolved to a stage where the likes of Doraemon are no longer just a fantasy (by that, I mean robots can now have autonomous facial expressions). However, I guess much still has to be done on the appearance of the robot, as it looks quite "scary". Oops~

I've also noticed that the "eyebrows" were not among the features used to display emotions, although I've always thought eyebrows are very effective at showing emotions; for example, a frown shows disagreement and a raised eyebrow shows disbelief. Perhaps the eyelids, ears and lips are already sufficient, hmm..

One application of this robot might be as a home tutor for languages, since it has a vocalization system driven by an articulatory synthesizer and multilingualism should not be a problem for robots. When the student gets bored or resists learning, the robot might even "coax" the student into listening to it teach! Hmm.. Do I see Doraemon in the making?

U0303270 Quak Yeok Teck

This is interesting, paving the way for the future development of humanoids like those we see in "I, Robot". As Synn Nee has pointed out, the eyebrows are static; making them movable could add to the expression possibilities. Do I sense that one day cheeks will be added to create even more expressions?

u0204779 Pang Sze Yong

When Synn Nee pointed out that the robot's appearance is scary, I think we can contrast that reaction with meeting another person with not-so-good-looking features (I'm trying not to use harsh words here, as it's a rather sensitive thing to talk about; by the way, I also agree the robot looks scary).

I believe most people interacting with someone with such features would tend not to think of them as 'scary', and thus we can see a difference in our attitudes towards fellow humans and towards robots.

Robots are not natural entities, and I think that contributes to our lack of respect for them. It brings to mind movies like A.I., I, Robot, The Matrix and the Matrix-themed animation The Animatrix, where robots have been given human-like thinking abilities and consider themselves worthy of respect, but their human creators do not show them due respect, sparking conflict and struggle between humans and robots.

If robotics advances to the state seen in these movies, will our attitudes towards robots advance just as quickly? Or will these science-fiction movies become reality?

U0307641 Low Youliang Freddy

I believe that, besides communicating with humans through facial expressions, the important thing this robot brought about was the recognition of emotions. This could be useful for future industries, such as service sectors that utilize robots. Facial recognition will be useful in many fields as more functions are integrated into robots.

U0205231 Lim Xiaoping

The ability of robots such as Kismet to take in and interpret visual and audio cues from the humans interacting with them is very impressive, and such technology can no doubt open up a wide range of possibilities for other applications.

For instance, I have read about an interesting application of such emotion recognition: the "emotional social intelligence prosthetic" device designed by the Media Lab at MIT. Using just a few seconds of video footage, it is able to detect whether the person in the footage is agreeing, disagreeing, concentrating, thinking, unsure or interested, an extension beyond the six more basic emotional states commonly detected: happiness, sadness, anger, fear, surprise and disgust.

This information can be very useful in helping people with autism relate to the person they are talking with, as one of the major difficulties autistic people face is that they are not as apt as others at picking up social cues. Hence this system can alert the autistic user when the person they are talking to shows signs of being bored or annoyed.
